Enterprise-grade AI Agents

for SRE, CloudOps and more.

Respond faster to incidents and maintenance requests

at a fraction of the human cost.

Proudly working with solid sponsors and partners who share our vision of building the next generation of computer systems.

Deploy our AI Agents in your infrastructure with 2 lines of code, whether it is cloud-based, on-premise, or hybrid.

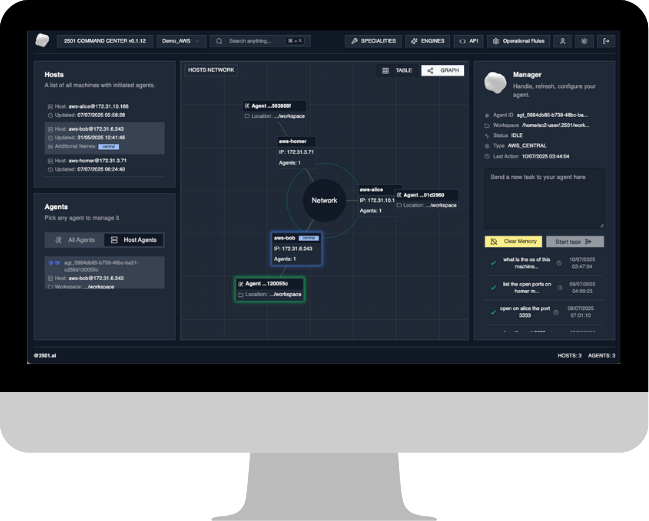

Monitor agent actions in our unified command center, analyse tasks, and follow up on any of them.

2501 Agents are autonomous: they listen for your incidents or requests and handle everything from root-cause analysis to remediation in a matter of seconds!

2501 Agents are built to meet the highest security and compliance standards. Fully compatible with SOC 2 and ISO 27001 requirements, they integrate seamlessly into sensitive environments, including on-premise and air-gapped infrastructures (SecNumCloud, EUCS, ...).

Whether you or your customers are operating in finance, healthcare, or defense, our architecture supports strict data governance, access control, and auditability—ensuring trust without compromising speed or autonomy.

Whether you're in partnership with a flagship LLM provider or prefer to use fully open-source models, we're compatible with all major models on the market!

We benchmarked hundreds of model permutations and always use the most efficient mixture of models for each specific task, file type, and architecture.

Our customers also benefit from exclusive fine-tuning ownership along the way: our agents generate data, we improve selected models, and the cycle repeats.

Our goal is to outperform what you can see on the market, especially when it comes to flexibility, accuracy, efficiency, and security.

A comparison of common features between 2501 and similar products available on the market.

Once you share a task with 2501, our AI orchestrator coordinates multiple LLMs to perform the task with state-of-the-art precision.

2501 leverages cutting-edge academic research in artificial intelligence, combined with operational feedback from our enterprise deployments, to continuously optimize speed, accuracy, and cost.

Our architecture evolves with each iteration—always maintaining compatibility with the strictest requirements of corporate environments, from compliance to performance under pressure.

AI companies often develop single models meant to handle most use-cases on their own. Basically, a jack of all trades, master of none. It often leads to inefficient handling of very specific code, latency due to poor context understanding, and (very) high token usage.

2501 constantly tries new and lesser-known models across a wide variety of tasks. Our state-of-the-art AI is self-adjusting, using the best model at all times to deliver better results with the fewest tokens necessary.

Follow our latest content in our monthly newsletter, and feel free to have a look at our job offers!